
The past few years have been incredibly exciting for AI. Tech giants like Google, Microsoft, Amazon and IBM have been investing heavily in the technology, and it’s evolving rapidly (that’s one of the key benefits of machine learning, after all!).

Today’s AI has been used to enhance search engines, self-driving cars, personal phone assistants - and yes, now DAM usability. But to make it one of the most time-saving additions to your DAM toolbox, you first need to understand what you can get out of it and where the technology’s limits lie.

That’s why we’d like to share 3 key lessons we’ve discovered whilst testing the most advanced image recognition tools available to DAM vendors today.

AI Lesson #1 - the technology is narrow, but it’s about to expand your metadata.

It may sound futuristic, but current AI technology is what experts call ‘narrow AI’. Simply put, it is designed to perform only limited, specific tasks. A search engine algorithm or a self-driving car, for example, uses the technology to achieve one clear task.

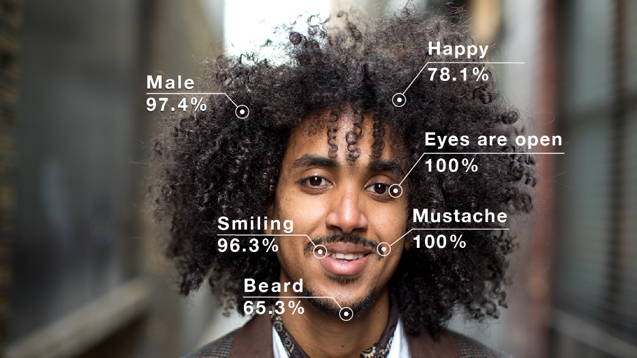

Facial analysis from Amazon Rekognition, one of the most advanced tools on the market (image courtesy of AWS).

When it comes to DAM, that task is image recognition. The technology can identify a host of criteria within every uploaded image - from landmarks to the emotions on individual faces.

Each identified criterion comes with a confidence score, as you can see in the image above. Users can set the threshold, so if you want a smaller number of highly reliable characteristics, you might set a minimum confidence level of 90% or above.
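To make the threshold idea concrete, here is a minimal Python sketch that filters detected labels by their confidence score. It assumes a response shaped like the output of Amazon Rekognition’s DetectLabels operation (a `Labels` list whose entries carry a `Name` and a `Confidence` expressed as a percentage); the function name, the 90% default and the sample data are illustrative assumptions, not part of any vendor’s SDK.

```python
from typing import Any


def filter_labels(response: dict[str, Any], min_confidence: float = 90.0) -> list[tuple[str, float]]:
    """Keep only the labels whose confidence meets the chosen threshold.

    Assumes a DetectLabels-style response:
    {"Labels": [{"Name": str, "Confidence": float}, ...]} with confidence in 0-100.
    """
    kept = [
        (label["Name"], label["Confidence"])
        for label in response.get("Labels", [])
        if label["Confidence"] >= min_confidence
    ]
    # Highest confidence first, so the most reliable tags are listed at the top.
    return sorted(kept, key=lambda pair: pair[1], reverse=True)


# Made-up sample data for illustration only.
sample = {
    "Labels": [
        {"Name": "Beach", "Confidence": 72.0},
        {"Name": "Person", "Confidence": 99.2},
        {"Name": "Smile", "Confidence": 91.5},
    ]
}

print(filter_labels(sample))        # [('Person', 99.2), ('Smile', 91.5)]
print(filter_labels(sample, 60.0))  # [('Person', 99.2), ('Smile', 91.5), ('Beach', 72.0)]
```

Rekognition’s DetectLabels also accepts a `MinConfidence` parameter, so the same cut-off can be applied on the service side; filtering a stored response locally just makes it cheap to compare several thresholds before settling on one.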

The identified criteria can then automatically be added to an image’s metadata. This instantly builds a much larger and richer range of terms to help users search out the perfect asset.
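Adding the results to metadata is mostly a merge problem: new terms should extend, not duplicate, what is already there. The sketch below assumes an asset’s keywords are held as a plain list of strings (a simplification; every DAM has its own metadata schema) and compares terms case-insensitively so that "Beach" and "beach" count as one keyword. The function name is hypothetical.

```python
def merge_keywords(existing: list[str], detected: list[str]) -> list[str]:
    """Append AI-detected terms to an asset's keywords without creating duplicates.

    Existing (human-entered) keywords keep their original spelling and order;
    new terms are compared case-insensitively and appended in case-folded form.
    """
    seen = {keyword.casefold() for keyword in existing}
    merged = list(existing)  # copy, so the caller's list is not modified
    for term in detected:
        key = term.casefold()
        if key not in seen:
            seen.add(key)
            merged.append(key)
    return merged


print(merge_keywords(["Beach", "summer campaign"], ["beach", "Person", "Smile", "person"]))
# ['Beach', 'summer campaign', 'person', 'smile']
```

Keeping human-entered keywords untouched and only appending machine terms means an administrator can always tell the two apart by position, and nothing a person deliberately wrote is overwritten.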

AI Lesson #2 - don’t trust robots blindly.

As sophisticated as image recognition might appear or sound, it’s still in its infancy and hasn’t yet matured (emotionally or analytically).

For example, we’ve observed that current software easily identifies positive emotions but struggles to detect negative ones. Beyond that, analysis can sometimes miss the mark completely - like identifying a man as a woman, or a lizard as a tree.

Fortunately, machine learning evolves very quickly: the more images are analyzed, the more accurate and robust the results become. And as the big image-recognition providers analyze billions of images every day, they’re catching up very rapidly.

So whilst the technology may lighten the workload, DAM administrators remain as pivotal as ever in validating and enhancing the metadata it provides. And regardless of how sensitive the robots become, the relevance of attached metadata will always be defined by your end users’ needs.
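One way to keep administrators in the loop without making them check every tag is to split detected labels into three buckets. In this minimal sketch, labels above a high threshold are accepted automatically, labels in a middle band go to a review queue, and anything below the lower threshold is dropped; terms you know the tools handle poorly (negative emotions, for instance) can be forced into review regardless of score. Both thresholds, the `always_review` set and all names here are illustrative assumptions to tune against your own collection.

```python
from dataclasses import dataclass, field
from typing import Iterable


@dataclass
class Triage:
    auto_approved: list[str] = field(default_factory=list)
    needs_review: list[str] = field(default_factory=list)
    discarded: list[str] = field(default_factory=list)


def triage_labels(
    labels: Iterable[tuple[str, float]],
    approve_at: float = 95.0,
    review_at: float = 70.0,
    always_review: Iterable[str] = (),
) -> Triage:
    """Sort (name, confidence) pairs into approve / review / discard buckets."""
    forced = {name.casefold() for name in always_review}
    result = Triage()
    for name, confidence in labels:
        if confidence < review_at:
            result.discarded.append(name)  # too uncertain to be worth a reviewer's time
        elif name.casefold() in forced or confidence < approve_at:
            result.needs_review.append(name)  # an administrator confirms or corrects it
        else:
            result.auto_approved.append(name)
    return result


queue = triage_labels(
    [("Person", 99.0), ("Sad", 97.0), ("Tree", 80.0), ("Lizard", 40.0)],
    always_review={"sad"},
)
print(queue)
# Triage(auto_approved=['Person'], needs_review=['Sad', 'Tree'], discarded=['Lizard'])
```

The review queue is where administrators apply the judgement the technology still lacks; over time, the labels they keep correcting are a good guide to which terms belong in `always_review`.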

AI Lesson #3 - define your AI requirements before selecting a vendor.

When looking to acquire a DAM, the first step is always to list the specific requirements and functionality you need. Why? Because it lets you match product features to those exact needs.

It also helps you evaluate what will be most useful to you, without getting swept away by the dazzling sales presentation.

The same is true with AI vendors.

We’ve been testing the functionality of the three key players in image recognition AI - Google Vision, Amazon Rekognition and Clarifai. As you’d expect, they provide a lot of data that can be really useful to DAM users. But each has strengths in different areas.

Getting the most out of this technology means considering how the people who use your DAM every day will actually put it to use. That way, you stay focused on what will make it useful for you, rather than getting carried away by everything the technology can do.

The best way to achieve this is to speak to your core users. Identify their pain points when searching and the searches they feel they lose the most time on. From here, build a list of key criteria for selection:

- Is our image collection varied enough to put this tool to use?

- What are the main searches being conducted?

- Do we have different categories of images (people, places, buildings, objects)?

- What kinds of searches would make people’s lives easier (location-based, sentiment-based)?
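Answering "What are the main searches being conducted?" is easier with data than from memory. If your DAM can export its search history, a short script can surface both the most frequent queries and the ones that most often come back empty, which are good candidates for richer AI-generated metadata. The log format here, a list of `(query, result_count)` pairs, is a hypothetical simplification, as is the function name; adapt both to whatever your system actually exports.

```python
from collections import Counter


def search_pain_points(
    log: list[tuple[str, int]], top_n: int = 5
) -> tuple[list[tuple[str, int]], list[tuple[str, int]]]:
    """Summarize a search log into the most frequent and most frequently empty queries.

    `log` is assumed to be a list of (query, result_count) pairs. Queries are
    normalized (trimmed and case-folded) so "Beach" and "beach " count together.
    """
    normalized = [(query.strip().casefold(), hits) for query, hits in log]
    frequency = Counter(query for query, _ in normalized)
    zero_results = Counter(query for query, hits in normalized if hits == 0)
    return frequency.most_common(top_n), zero_results.most_common(top_n)


# Made-up sample log for illustration only.
sample_log = [
    ("Beach", 12),
    ("beach ", 10),
    ("smiling team", 0),
    ("office", 3),
    ("smiling team", 0),
    ("beach", 0),
]

top, empty = search_pain_points(sample_log, top_n=2)
print(top)    # [('beach', 3), ('smiling team', 2)]
print(empty)  # [('smiling team', 2), ('beach', 1)]
```

A query like "smiling team" that users keep typing and keep getting nothing for points straight at the kind of criteria (here, people and sentiment) worth prioritizing when you compare tools.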

When you have a clear picture of what you’re looking for, it’s time to evaluate the tools.
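One common way to turn those answers into a decision is a weighted scoring matrix: give each criterion an importance weight based on what your users told you, score each tool on that criterion during your own trials, and rank by the weighted average. The sketch below uses placeholder tool names and made-up numbers purely to show the arithmetic; it is not a comparison of any real vendor, and `rank_tools` is a hypothetical helper.

```python
def rank_tools(
    weights: dict[str, float], scores: dict[str, dict[str, float]]
) -> list[tuple[str, float]]:
    """Rank tools by the weighted average of their per-criterion scores.

    weights: {criterion: importance}, e.g. agreed after user interviews.
    scores:  {tool: {criterion: score}}, from your own tests on sample images.
    A criterion a tool was not scored on counts as 0.
    """
    total_weight = sum(weights.values())
    if total_weight <= 0:
        raise ValueError("weights must sum to a positive number")
    ranked = []
    for tool, tool_scores in scores.items():
        weighted_sum = sum(
            weight * tool_scores.get(criterion, 0.0) for criterion, weight in weights.items()
        )
        ranked.append((tool, weighted_sum / total_weight))
    # Highest weighted average first.
    return sorted(ranked, key=lambda pair: pair[1], reverse=True)


# Placeholder names and scores (out of 10), purely to show the arithmetic.
weights = {"faces": 3, "landmarks": 1}
scores = {
    "tool_a": {"faces": 8, "landmarks": 4},
    "tool_b": {"faces": 5, "landmarks": 10},
}
print(rank_tools(weights, scores))  # [('tool_a', 7.0), ('tool_b', 6.25)]
```

Here tool_b scores higher on landmarks, but because these hypothetical users weighted faces three times as heavily, tool_a comes out ahead. That is the point of defining requirements first: the same test results can produce a different winner for a different collection.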